This FAQ is for Open MPI v4.x and earlier.

If you are looking for documentation for Open MPI v5.x and later, please visit docs.open-mpi.org.

Table of contents:

- What Open MPI components support InfiniBand / RoCE / iWARP?
- What component will my OpenFabrics-based network use by default?
- Does Open MPI support iWARP?
- Does Open MPI support RoCE (RDMA over Converged Ethernet)?
- I have an OFED-based cluster; will Open MPI work with that?
- Where do I get the OFED software from?
- Isn't Open MPI included in the OFED software package? Can I install another copy of Open MPI besides the one that is included in OFED?
- What versions of Open MPI are in OFED?
- Why are you using the name "openib" for the BTL name?
- Is the mVAPI-based BTL still supported?
- How do I specify to use the OpenFabrics network for MPI messages? (openib BTL)
- But wait, I also have a TCP network. Do I need to explicitly disable the TCP BTL?
- How do I know what MCA parameters are available for tuning MPI performance?
- I'm experiencing a problem with Open MPI on my OpenFabrics-based network; how do I troubleshoot and get help?
- What is "registered" (or "pinned") memory?
- I'm getting errors about "error registering openib memory"; what do I do? (openib BTL)
- How can a system administrator (or user) change locked memory limits?
- I'm still getting errors about "error registering openib memory"; what do I do? (openib BTL)
- Open MPI is warning me about limited registered memory; what does this mean?
- I'm using Mellanox ConnectX HCA hardware and seeing terrible latency for short messages; how can I fix this?
- How much registered memory is used by Open MPI? Is there a way to limit it? (openib BTL)
- How do I get Open MPI working on Chelsio iWARP devices? (openib BTL)
- I'm getting "ibv_create_qp: returned 0 byte(s) for max inline data" errors; what is this, and how do I fix it? (openib BTL)
- My bandwidth seems [far] smaller than it should be; why? Can this be fixed? (openib BTL)
- How do I tune small messages in Open MPI v1.1 and later versions? (openib BTL)
- How do I tune large message behavior in the Open MPI v1.2 series? (openib BTL)
- How do I tune large message behavior in the Open MPI v1.3 (and later) series? (openib BTL)
- How does the mpi_leave_pinned parameter affect large message transfers? (openib BTL)
- How does the mpi_leave_pinned parameter affect memory management? (openib BTL)
- How does the mpi_leave_pinned parameter affect memory management in Open MPI v1.2? (openib BTL)
- How does the mpi_leave_pinned parameter affect memory management in Open MPI v1.3? (openib BTL)
- How can I set the mpi_leave_pinned MCA parameter? (openib BTL)
- I got an error message from Open MPI about not using the default GID prefix. What does that mean, and how do I fix it? (openib BTL)
- What subnet ID / prefix value should I use for my OpenFabrics networks?
- How do I set my subnet ID?
- In a configuration with multiple host ports on the same fabric, what connection pattern does Open MPI use? (openib BTL)
- I'm getting lower performance than I expected. Why?
- I get bizarre linker warnings / errors / run-time faults when I try to compile my OpenFabrics MPI application statically. How do I fix this?
- Can I use system(), popen(), or fork() in an MPI application that uses the OpenFabrics support? (openib BTL)
- My MPI application sometimes hangs when using the openib BTL; how can I fix this? (openib BTL)
- Does InfiniBand support QoS (Quality of Service)?
- Does Open MPI support InfiniBand clusters with torus/mesh topologies? (openib BTL)
- How do I tell Open MPI which IB Service Level to use? (openib BTL)
- How do I tell Open MPI which IB Service Level to use? (UCX PML)
- What is RDMA over Converged Ethernet (RoCE)?
- How do I run Open MPI over RoCE? (openib BTL)
- How do I run Open MPI over RoCE? (UCX PML)
- Does Open MPI support XRC? (openib BTL)
- How do I specify the type of receive queues that I want Open MPI to use? (openib BTL)
- Does Open MPI support FCA?
- Does Open MPI support MXM?
- Does Open MPI support UCX?
- I'm getting errors about "initializing an OpenFabrics device" when running v4.0.0 with UCX support enabled. What should I do?
- How can I find out what devices and transports are supported by UCX on my system?
- What is cpu-set?
- Does Open MPI support connecting hosts from different subnets? (openib BTL)

| 1. What Open MPI components support InfiniBand / RoCE / iWARP? |

In order to meet the needs of an ever-changing networking hardware and software ecosystem, Open MPI's support of InfiniBand, RoCE, and iWARP has evolved over time.

Here is a summary of components in Open MPI that support InfiniBand, RoCE, and/or iWARP, ordered by Open MPI release series:

| Open MPI series | OpenFabrics support |
| v1.0 series | openib and mvapi BTLs |
| v1.1 series | openib and mvapi BTLs |
| v1.2 series | openib and mvapi BTLs |
| v1.3 / v1.4 series | openib BTL |
| v1.5 / v1.6 series | openib BTL, mxm MTL, fca coll |
| v1.7 / v1.8 series | openib BTL, mxm MTL, fca and ml and hcoll coll |
| v2.x series | openib BTL, yalla (MXM) PML, ml and hcoll coll |
| v3.x series | openib BTL, ucx and yalla (MXM) PML, hcoll coll |
| v4.x series | openib BTL, ucx PML, hcoll coll, ofi MTL |

History / notes:

- The openib BTL uses the OpenFabrics Alliance's (OFA) verbs API stack to support InfiniBand, RoCE, and iWARP devices. The OFA's original name was "OpenIB", which is why the BTL is named openib.

- Before the verbs API was effectively standardized in the OFA's verbs stack, Open MPI supported Mellanox VAPI in the mvapi module. The MVAPI API stack has long since been discarded, and it is not supported after the Open MPI v1.2 series.

- The next-generation, higher-abstraction API for supporting InfiniBand and RoCE devices is named UCX. As of Open MPI v4.0.0, the ucx PML is the preferred mechanism for utilizing InfiniBand and RoCE devices. As of UCX v1.8, iWARP is not supported. See this FAQ entry for more information about iWARP.

| 2. What component will my OpenFabrics-based network use by default? |

Per this FAQ item, OpenFabrics-based networks have generally used the openib BTL for native verbs-based communication for MPI point-to-point communications. Because of this history, many of the questions below refer to the openib BTL, and are specifically marked as such.

The following are exceptions to this general rule:

- In the v2.x and v3.x series, Mellanox InfiniBand devices defaulted to MXM-based components (e.g., mxm and/or yalla).

- In the v4.0.x series, Mellanox InfiniBand devices default to the ucx PML. The use of InfiniBand over the openib BTL is officially deprecated in the v4.0.x series, and is scheduled to be removed in Open MPI v5.0.0.

That being said, it is generally possible for any OpenFabrics device to use the openib BTL or the ucx PML:

- To make the openib BTL use InfiniBand in v4.0.x, set the btl_openib_allow_ib parameter to 1.

- See this FAQ item for information about using the ucx PML with arbitrary OpenFabrics devices.

| 3. Does Open MPI support iWARP? |

iWARP is fully supported via the openib BTL as of the Open MPI v1.3 release.

Note that the openib BTL is scheduled to be removed from Open MPI starting with v5.0.0. After the openib BTL is removed, support for iWARP is murky, at best. As of June 2020 (in the v4.x series), there are two alternate mechanisms for iWARP support which will likely continue into the v5.x series:

- The cm PML with the ofi MTL. This mechanism is actually designed for networks that natively support "MPI-style matching", which iWARP does not support. Hence, Libfabric adds a layer of software emulation to provide this functionality. This slightly decreases Open MPI's performance on iWARP networks. That being said, it seems to work correctly.

- The ofi BTL. A new/prototype BTL named ofi is being developed (and can be used in place of the openib BTL); it uses Libfabric to directly access the native iWARP device functionality, without the software-emulation performance penalty of the "MPI-style matching" in the cm PML + ofi MTL combination.

  However, the ofi BTL is neither widely tested nor fully developed. As of June 2020, it did not work with iWARP, but it may be updated in the future.

This state of affairs reflects that the iWARP vendor community is not involved with Open MPI; we therefore have no one who is actively developing, testing, or supporting iWARP users in Open MPI. If anyone is interested in helping with this situation, please let the Open MPI developer community know.

NOTE: A prior version of this FAQ entry stated that iWARP support was available through the ucx PML. That was incorrect. As of UCX v1.8, iWARP is not supported.

| 4. Does Open MPI support RoCE (RDMA over Converged Ethernet)? |

RoCE is fully supported as of the Open MPI v1.4.4 release.

As of Open MPI v4.0.0, the UCX PML is the preferred mechanism for running over RoCE-based networks. See this FAQ entry for details.

The openib BTL is also available for use with RoCE-based networks through the v4.x series; see this FAQ entry for information on how to use it. Note, however, that the openib BTL is scheduled to be removed from Open MPI in v5.0.0.

| 5. I have an OFED-based cluster; will Open MPI work with that? |

Yes.

OFED (OpenFabrics Enterprise Distribution) is basically the release mechanism for the OpenFabrics software packages. OFED releases are officially tested and released versions of the OpenFabrics stacks.

| 6. Where do I get the OFED software from? |

The "Download" section of the OpenFabrics web site has links for the various OFED releases.

Additionally, Mellanox distributes Mellanox OFED and Mellanox-X binary distributions. Consult your IB vendor for more details.

| 7. Isn't Open MPI included in the OFED software package? Can I install another copy of Open MPI besides the one that is included in OFED? |

Yes, Open MPI used to be included in the OFED software. And yes, you can easily install a later version of Open MPI on OFED-based clusters, even if you're also using the Open MPI that was included in OFED.

You can simply download the Open MPI version that you want and install it in a different directory from the one where the OFED-based Open MPI was installed. You then have multiple copies of Open MPI that do not conflict with each other. Make sure you set the PATH and LD_LIBRARY_PATH variables to point to exactly one of your Open MPI installations at a time, and never try to run an MPI executable compiled with one version of Open MPI with a different version of Open MPI.

Be sure to build Open MPI with OpenFabrics support; see this FAQ item for more information.
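The PATH / LD_LIBRARY_PATH step can be sketched as a Bourne-shell function that you could put in a shell startup file. The function name use_openmpi and any prefix you pass to it are hypothetical. The sketch assumes the installation keeps its libraries in <prefix>/lib, although some builds use lib64. It only prepends directories, so the chosen installation is found first; it does not remove directories that belong to other Open MPI copies, such as the one from OFED.

```shell
#!/bin/sh
# Minimal sketch (hypothetical helper): put one Open MPI installation first
# on PATH and LD_LIBRARY_PATH. Assumes the usual <prefix>/bin and
# <prefix>/lib layout; some builds use <prefix>/lib64 instead.
use_openmpi() {
    prefix=$1
    PATH="$prefix/bin:$PATH"
    if [ -n "${LD_LIBRARY_PATH:-}" ]; then
        LD_LIBRARY_PATH="$prefix/lib:$LD_LIBRARY_PATH"
    else
        LD_LIBRARY_PATH="$prefix/lib"
    fi
    export PATH LD_LIBRARY_PATH
}

# Example (hypothetical install prefix):
#   use_openmpi /opt/openmpi-4.1
#   command -v mpirun    # should now print /opt/openmpi-4.1/bin/mpirun
```

Running `command -v mpirun` after calling the function is a quick way to confirm which installation will be picked up.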

| 8. What versions of Open MPI are in OFED? |

The following versions of Open MPI shipped in OFED (note that OFED stopped including MPI implementations as of OFED 1.5):

- OFED 1.4.1: Open MPI v1.3.2.
- OFED 1.4: Open MPI v1.2.8.
- OFED 1.3.1: Open MPI v1.2.6.
- OFED 1.3: Open MPI v1.2.5.
- OFED 1.2: Open MPI v1.2.1.

  NOTE: A prior version of this FAQ entry specified that "v1.2ofed" would be included in OFED v1.2, representing a temporary branch from the v1.2 series that included some OFED-specific functionality. All of this functionality was included in the v1.2.1 release, so OFED v1.2 simply included that. Some public betas of "v1.2ofed" releases were made available, but this version was never officially released.

- OFED 1.1: Open MPI v1.1.1.
- OFED 1.0: Open MPI v1.1b1.

| 9. Why are you using the name "openib" for the BTL name? |

Before the iWARP vendors joined the OpenFabrics Alliance, the project was known as OpenIB. Open MPI's support for this software stack was originally written during this timeframe; the name of the group was "OpenIB", so we named the BTL openib.

Since then, iWARP vendors joined the project and it changed names to "OpenFabrics". Open MPI did not rename its BTL mainly for historical reasons: we didn't want to break compatibility for users who were already using the openib BTL name in scripts, etc.

| 10. Is the mVAPI-based BTL still supported? |

Yes, but only through the Open MPI v1.2 series; mVAPI support was removed starting with v1.3.

The mVAPI support is an InfiniBand-specific BTL (i.e., it will not work in iWARP networks), and reflects a prior generation of InfiniBand software stacks.

The Open MPI team is doing no new work with mVAPI-based networks.

Generally, much of the information contained in this FAQ category applies to both the OpenFabrics openib BTL and the mVAPI mvapi BTL; simply replace openib with mvapi to get similar results. However, new features and options are continually being added to the openib BTL (and are being listed in this FAQ) that will not be back-ported to the mvapi BTL. So not all openib-specific items in this FAQ category will apply to the mvapi BTL.

All that being said, as of Open MPI v4.0.0, the use of InfiniBand over the openib BTL is deprecated; the UCX PML is the preferred way to run over InfiniBand.

| 11. How do I specify to use the OpenFabrics network for MPI messages? (openib BTL) |

In general, you specify that the openib BTL components should be used. However, note that you should also specify that the self BTL component should be used. self is for loopback communication (i.e., when an MPI process sends to itself), and is technically a different communication channel than the OpenFabrics networks. For example:

    shell$ mpirun --mca btl openib,self ...

Failure to specify the self BTL may result in Open MPI being unable to complete send-to-self scenarios (meaning that your program will run fine until a process tries to send to itself).

Note that openib,self is the minimum list of BTLs that you might want to use. It is highly likely that you also want to include the vader (shared memory) BTL in the list, like this:

    shell$ mpirun --mca btl openib,self,vader ...

NOTE: Prior versions of Open MPI used an sm BTL for shared memory. sm was effectively replaced with vader starting in Open MPI v3.0.0.

See this FAQ entry for more details on selecting which MCA plugins are used at run-time.

Finally, note that if the openib component is available at run time, Open MPI should automatically use it by default (ditto for self). Hence, it's usually unnecessary to specify these options on the mpirun command line. They are typically only used when you want to be absolutely positively definitely sure to use the specific BTL.

| 12. But wait, I also have a TCP network. Do I need to explicitly disable the TCP BTL? |

No. See this FAQ entry for more details.

| 13. How do I know what MCA parameters are available for tuning MPI performance? |

The ompi_info command can display all the parameters available for any Open MPI component. For example:

    # Note that Open MPI v1.8 and later will only show an abbreviated list
    # of parameters by default. Use "--level 9" to show all available
    # parameters.

    # Show the UCX PML parameters
    shell$ ompi_info --param pml ucx --level 9

    # Show the openib BTL parameters
    shell$ ompi_info --param btl openib --level 9

| 14. I'm experiencing a problem with Open MPI on my OpenFabrics-based network; how do I troubleshoot and get help? |

To help us help you, please run a few steps before sending an e-mail, both to perform some basic troubleshooting and to give us enough information about your environment. Please include answers to the following questions in your e-mail:

- Which Open MPI component are you using? Possibilities include: the ucx PML, the yalla PML, the mxm MTL, the openib BTL.

- Which OpenFabrics version are you running? Please specify where you got the software from (e.g., from the OpenFabrics community web site, from a vendor, or it was already included in your Linux distribution).

- What distro and version of Linux are you running? What is your kernel version?

- Which subnet manager are you running? (e.g., OpenSM, a vendor-specific subnet manager, etc.)

- What is the output of the ibv_devinfo command on a known "good" node and a known "bad" node? (NOTE: there must be at least one port listed as "PORT_ACTIVE" for Open MPI to work. If there is not at least one PORT_ACTIVE port, something is wrong with your OpenFabrics environment and Open MPI will not be able to run.)

- What is the output of the ifconfig command on a known "good" node and a known "bad" node? (Mainly relevant for IPoIB installations.) Note that some Linux distributions do not put ifconfig in the default path for normal users; look for it at /sbin/ifconfig or /usr/sbin/ifconfig.

- If running under Bourne shells, what is the output of the ulimit -l command? If running under C shells, what is the output of the limit | grep memorylocked command? (NOTE: If the value is not "unlimited", see this FAQ entry and this FAQ entry.)

Gather up this information and see this page about how to submit a help request to the users' mailing list.
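The PORT_ACTIVE requirement from the ibv_devinfo question above can be checked with a small script. This is a hypothetical helper, not part of Open MPI. It assumes ibv_devinfo prints each port's state on a line such as `state: PORT_ACTIVE (4)`.

```shell
#!/bin/sh
# Minimal sketch (hypothetical helper): succeed if ibv_devinfo output,
# read from stdin, shows at least one port whose state is PORT_ACTIVE.
# Assumes port states are printed as "state: PORT_ACTIVE (4)".
has_active_port() {
    grep -q 'state:[[:space:]]*PORT_ACTIVE'
}

# Run the check on this node, if the OpenFabrics utilities are installed.
if command -v ibv_devinfo >/dev/null 2>&1; then
    if ibv_devinfo | has_active_port; then
        echo "OK: at least one PORT_ACTIVE port"
    else
        echo "No PORT_ACTIVE port: check the OpenFabrics setup" >&2
    fi
fi
```

Running the same script on a known "good" node and a known "bad" node gives a quick first comparison before you collect the full ibv_devinfo output.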

| 15. What is "registered" (or "pinned") memory? |

"Registered" memory means two things:

- The memory has been "pinned" by the operating system such that the virtual memory subsystem will not relocate the buffer (until it has been unpinned).

- The network adapter has been notified of the virtual-to-physical address mapping.

These two factors allow network adapters to move data between the network fabric and physical RAM without involvement of the main CPU or operating system.

Note that many people say "pinned" memory when they actually mean "registered" memory.

However, a host can only support so much registered memory, so it is treated as a precious resource. Additionally, the cost of registering (and unregistering) memory is fairly high. Open MPI takes aggressive steps to use as little registered memory as possible (balanced against performance implications, of course) and to mitigate the cost of registering and unregistering memory.

| 16. I'm getting errors about "error registering openib memory"; what do I do? (openib BTL) |

With OpenFabrics (and therefore the openib BTL component), you need to set the available locked memory to a large number (or better yet, unlimited); the defaults with most Linux installations are usually too low for most HPC applications that utilize OpenFabrics. Failure to do so will result in an error message similar to one of the following (the messages have changed throughout the release versions of Open MPI):

    WARNING: It appears that your OpenFabrics subsystem is configured to only
    allow registering part of your physical memory. This can cause MPI jobs to
    run with erratic performance, hang, and/or crash.

    This may be caused by your OpenFabrics vendor limiting the amount of
    physical memory that can be registered. You should investigate the
    relevant Linux kernel module parameters that control how much physical
    memory can be registered, and increase them to allow registering all
    physical memory on your machine.

    See this Open MPI FAQ item for more information on these Linux kernel
    module parameters:

    http://www.linux-pam.org/Linux-PAM-html/sag-pam_limits.html

    Local host: node02
    Registerable memory: 32768 MiB
    Total memory: 65476 MiB

    Your MPI job will continue, but may be behave poorly and/or hang.

    [node06:xxxx] mca_mpool_openib_register: \
    error registering openib memory of size yyyy errno says Cannot allocate memory

    [x,y,z][btl_openib.c:812:mca_btl_openib_create_cq_srq] \
    error creating low priority cq for mthca0 errno says Cannot allocate memory

    libibverbs: Warning: RLIMIT_MEMLOCK is 32768 bytes.
    This will severely limit memory registrations.

    The OpenIB BTL failed to initialize while trying to create an internal
    queue. This typically indicates a failed OpenFabrics installation or
    faulty hardware. The failure occurred here:

    Host: compute_node.example.com
    OMPI source: btl_openib.c:828
    Function: ibv_create_cq()
    Error: Invalid argument (errno=22)
    Device: mthca0

    You may need to consult with your system administrator to get this
    problem fixed.

    The OpenIB BTL failed to initialize while trying to allocate some
    locked memory. This typically can indicate that the memlock limits
    are set too low. For most HPC installations, the memlock limits
    should be set to "unlimited". The failure occurred here:

    Host: compute_node.example.com
    OMPI source: btl_opebib.c:114
    Function: ibv_create_cq()
    Device: Out of memory
    Memlock limit: 32767

    You may need to consult with your system administrator to get this
    problem fixed. This FAQ entry on the Open MPI web site may also be
    helpful:

    http://www.linux-pam.org/Linux-PAM-html/sag-pam_limits.html

    error creating qp errno says Cannot allocate memory

There are two typical causes for Open MPI being unable to register memory, or warning that it might not be able to register enough memory:

- Linux kernel module parameters that control the amount of available registered memory are set too low; see this FAQ entry.

- System / user needs to increase locked memory limits: see this FAQ entry and this FAQ entry.

| 17. How can a system administrator (or user) change locked memory limits? |

There are two ways to control the amount of memory that a user process can lock:

- Assuming that the PAM limits module is being used (see full docs for the Linux PAM limits module, or this mirror), the system-level default values are controlled by putting a file in /etc/security/limits.d/ (or directly editing the /etc/security/limits.conf file on older systems). Two limits are configurable:

  - Soft: The "soft" value is how much memory is allowed to be locked by user processes by default. Set it by creating a file in /etc/security/limits.d/ (e.g., 95-openfabrics.conf) with the line below (or, if your system doesn't have a /etc/security/limits.d/ directory, add a line directly to /etc/security/limits.conf):

        * soft memlock <number>

    where <number> is the number of kilobytes that you want user processes to be allowed to lock by default (presumably rounded down to an integral number of pages). <number> can also be unlimited.

  - Hard: The "hard" value is the maximum amount of memory that a user process can lock. As with the soft limit, add it to the file you created in /etc/security/limits.d/ (or to /etc/security/limits.conf directly on older systems):

        * hard memlock <number>

    where <number> is the maximum number of kilobytes that you want user processes to be allowed to lock (presumably rounded down to an integral number of pages). <number> can also be unlimited.

- Per-user default values are controlled via the ulimit command (or limit in csh). The default amount of memory allowed to be locked will correspond to the "soft" limit set in /etc/security/limits.d/ (or limits.conf; see above); users cannot use ulimit (or limit in csh) to set their amount to be more than the hard limit in /etc/security/limits.d (or limits.conf).
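The system-level setting described in the first item above can be sketched as a small shell function that writes both the soft and hard lines into one drop-in file. The function name is hypothetical. The file name 95-openfabrics.conf is the example used above, and the value is either unlimited or a size in kilobytes. The target directory is an argument, so you can try the function on a scratch directory first; writing to /etc/security/limits.d/ itself requires root.

```shell
#!/bin/sh
# Minimal sketch (hypothetical helper): write soft and hard memlock limits
# for all users ("*") into a PAM limits.d drop-in file.
# $1: target directory (default /etc/security/limits.d; needs root)
# $2: limit value, "unlimited" or a size in kilobytes (default unlimited)
write_memlock_limits() {
    dir=${1:-/etc/security/limits.d}
    value=${2:-unlimited}
    file="$dir/95-openfabrics.conf"
    {
        echo "* soft memlock $value"
        echo "* hard memlock $value"
    } > "$file" || return 1
    echo "$file"    # report which file was written
}

# Example (as root):   write_memlock_limits
# Example (dry run):   write_memlock_limits "$(mktemp -d)" unlimited
```

pam_limits applies these values when a login session is set up, so only new sessions see them. Processes that are already running, including daemons, keep their old limits.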

Users can increase the default limit by adding the following to their shell startup files for Bourne style shells (sh, bash):

    shell$ ulimit -l unlimited

Or for C style shells (csh, tcsh):

    shell% limit memorylocked unlimited

This effectively sets their limit to the hard limit in /etc/security/limits.d (or limits.conf). Alternatively, users can set a specific number instead of "unlimited", but this has limited usefulness unless a user is aware of exactly how much locked memory they will require (which is difficult to know since Open MPI manages locked memory behind the scenes).

It is important to realize that this must be set in all shells where Open MPI processes using OpenFabrics will be run. For example, if you are using rsh or ssh to start parallel jobs, it will be necessary to set the ulimit in your shell startup files so that it is effective on the processes that are started on each node.

More specifically: it may not be sufficient to simply execute the following, because the ulimit may not be in effect on all nodes where Open MPI processes will be run:

    shell$ ulimit -l unlimited
    shell$ mpirun -np 2 my_mpi_application
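One way to verify the limit is to test the value that ulimit -l reports in the context where MPI processes are actually launched. The helper below is a hypothetical sketch, not part of Open MPI. It assumes the reported value is either unlimited or a number of kilobytes, as Bourne-style shells print it. The 1 GiB threshold in the example is an arbitrary choice, since the recommendation above is simply unlimited.

```shell
#!/bin/sh
# Minimal sketch (hypothetical helper): succeed if a locked-memory limit,
# as printed by `ulimit -l` ("unlimited" or a number of kilobytes), is at
# least the given minimum.
# $1: limit value, $2: minimum acceptable limit in kilobytes
memlock_ok() {
    limit=$1
    min_kb=$2
    [ "$limit" = "unlimited" ] && return 0
    case $limit in
        ''|*[!0-9]*) return 1 ;;   # empty or not a plain number
    esac
    [ "$limit" -ge "$min_kb" ]
}

# Check the current shell against an arbitrary 1 GiB (1048576 KB) minimum.
current=$(ulimit -l)
if memlock_ok "$current" 1048576; then
    echo "memlock limit OK: $current"
else
    echo "memlock limit too low: $current" >&2
fi
```

You can also check a remote node the way rsh/ssh-launched processes see it by passing in the output of a non-interactive remote command, for example `memlock_ok "$(ssh node02 'ulimit -l')" 1048576`. Here node02 is a placeholder host name, and the command assumes a Bourne-style login shell on that node.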

| 18. I'm still getting errors about "error registering openib memory"; what do I do? (openib BTL) |

Ensure that the limits you've set (see this FAQ entry) are actually being used. There are two general cases where they may not be:

- Your memory locked limits are not actually being applied for interactive and/or non-interactive logins.

- You are starting MPI jobs under a resource manager / job scheduler that is either explicitly resetting the memory limit or has daemons that were (usually accidentally) started with very small memory locked limits.

That is, in some cases, it is possible to log in to a node and not have the "limits" set properly. For example, in one post on the Open MPI users' mailing list, the user noted that the default configuration on his Linux system did not automatically load pam_limits.so upon rsh-based logins, meaning that the hard and soft limits were not set.
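A quick way to look for this particular misconfiguration is to check whether the PAM service used for remote logins enables pam_limits.so. The sketch below is a hypothetical helper. It only looks for a direct, uncommented `session ... pam_limits.so` line and does not follow include or substack directives. On distributions where sshd pulls pam_limits.so in through another file, inspect that file as well. The service file paths used here are common defaults, not guaranteed.

```shell
#!/bin/sh
# Minimal sketch (hypothetical helper): succeed if the given PAM service
# file contains an uncommented "session ... pam_limits.so" line.
# It does not follow "include", "substack", or "@include" directives.
pam_limits_enabled() {
    grep -Eq '^[[:space:]]*session[[:space:]]+.*pam_limits\.so' "$1"
}

# Check the PAM services commonly used for rsh and ssh logins, if present.
for svc in /etc/pam.d/rsh /etc/pam.d/sshd; do
    [ -r "$svc" ] || continue
    if pam_limits_enabled "$svc"; then
        echo "$svc: pam_limits.so enabled"
    else
        echo "$svc: no direct pam_limits.so session line" >&2
    fi
done
```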

There are also some default configurations where, even though the maximum limits are initially set system-wide in limits.d (or limits.conf on older systems), something during the boot procedure sets the default limit back down to a low number (e.g., 32k). In this case, you may need to override this limit on a per-user basis (described in this FAQ entry), or effectively system-wide by putting ulimit -l unlimited (for Bourne-like shells) in a strategic location, such as:

- /etc/init.d/sshd (or wherever the script is that starts up your SSH daemon), and then restarting the SSH daemon

- A script in /etc/profile.d, or wherever system-wide shell startup scripts are located (e.g., /etc/profile and /etc/csh.cshrc)

Also, note that resource managers such as Slurm, Torque/PBS, LSF, etc. may affect OpenFabrics jobs in two ways:

- Make sure that the resource manager daemons are started with unlimited memlock limits (which may involve editing the resource manager daemon startup script, or some other system-wide location that allows the resource manager daemon to get an unlimited limit of locked memory). Otherwise, jobs that are started under that resource manager will get the default locked memory limits, which are far too small for Open MPI.

  *The files in limits.d (or the limits.conf file) do not usually apply to resource daemons!* The limits.d files usually apply only to rsh or ssh-based logins. Hence, daemons usually inherit the system default of maximum 32k of locked memory (which then gets passed down to the MPI processes that they start). To increase this limit, you typically need to modify the daemons' startup scripts to increase the limit before they drop root privileges.

- Some resource managers can limit the amount of locked memory that is made available to jobs. For example, Slurm has some fine-grained controls that allow locked memory for only Slurm jobs (i.e., the system's default is low memory lock limits, but Slurm jobs can get high memory lock limits). See these FAQ items on the Slurm web site for more details: propagating limits and using PAM.

Finally, note that some versions of SSH have problems with getting correct values from /etc/security/limits.d/ (or limits.conf) when using privilege separation. You may notice this by ssh'ing into a node and seeing that your memlock limits are far lower than what you have listed in /etc/security/limits.d/ (or limits.conf) (e.g., 32k instead of unlimited). Several web sites suggest disabling privilege separation in ssh to make PAM limits work properly, but others imply that this may be fixed in recent versions of OpenSSH.

If you do disable privilege separation in ssh, be sure to check with your local system administrator and/or security officers to understand the full implications of this change. See this Google search link for more information.

| 19. Open MPI is warning me about limited registered memory; what does this mean? |

OpenFabrics network vendors provide Linux kernel module parameters controlling the size of the memory translation table (MTT) used to map virtual addresses to physical addresses. The size of this table controls the amount of physical memory that can be registered for use with OpenFabrics devices.

With Mellanox hardware, two parameters are provided to control the size of this table:

- log_num_mtt (on some older Mellanox hardware, the parameter may be num_mtt, not log_num_mtt): log2 of the number of memory translation tables

- log_mtts_per_seg: log2 of the number of MTT entries per segment

The amount of memory that can be registered is calculated using this formula:

    In newer hardware:
        max_reg_mem = (2^log_num_mtt) * (2^log_mtts_per_seg) * PAGE_SIZE

    In older hardware:
        max_reg_mem = num_mtt * (2^log_mtts_per_seg) * PAGE_SIZE
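The newer-hardware formula can be evaluated directly in shell arithmetic. This is a minimal sketch with a hypothetical function name, and it assumes the shell performs 64-bit integer arithmetic, as bash and dash do on common 64-bit platforms. The example plugs in log_num_mtt = 24, log_mtts_per_seg = 1, and a 4 KB page.

```shell
#!/bin/sh
# Minimal sketch (hypothetical helper) of the newer-hardware formula:
#   max_reg_mem = (2^log_num_mtt) * (2^log_mtts_per_seg) * PAGE_SIZE
# $1: log_num_mtt, $2: log_mtts_per_seg, $3: page size in bytes
# Assumes 64-bit shell arithmetic.
max_reg_mem() {
    echo $(( (1 << $1) * (1 << $2) * $3 ))
}

bytes=$(max_reg_mem 24 1 4096)
echo "$bytes bytes = $(( bytes >> 30 )) GiB"   # 137438953472 bytes = 128 GiB
```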

*At least some versions of OFED (community OFED, Mellanox OFED, and upstream OFED in Linux distributions) set the default values of these variables FAR too low!* For example, in some cases, the default values may only allow registering 2 GB, even if the node has much more than 2 GB of physical memory.

Mellanox has advised the Open MPI community to increase the log_num_mtt value (or num_mtt value), _not the log_mtts_per_seg value_ (even though an IBM article suggests increasing the log_mtts_per_seg value).

It is recommended that you adjust log_num_mtt (or num_mtt) such that your max_reg_mem value is at least twice the amount of physical memory on your machine (setting it to a value higher than the amount of physical memory present allows the internal Mellanox driver tables to handle fragmentation and other overhead). For example, if a node has 64 GB of memory and a 4 KB page size, log_num_mtt should be set to 24 (assuming log_mtts_per_seg is set to 1). This will allow processes on the node to register:

    max_reg_mem = (2^24) * (2^1) * (4 KB) = 128 GB
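Working the recommendation backwards, a short loop can find the smallest log_num_mtt that reaches twice the physical memory. This is a minimal sketch under the same assumptions as the formula above: a hypothetical function name and 64-bit shell arithmetic. The /proc/meminfo part assumes Linux; note that MemTotal is slightly smaller than the installed RAM.

```shell
#!/bin/sh
# Minimal sketch (hypothetical helper): smallest log_num_mtt for which
#   (2^log_num_mtt) * (2^log_mtts_per_seg) * PAGE_SIZE >= 2 * physical memory
# $1: physical memory in bytes, $2: log_mtts_per_seg, $3: page size in bytes
min_log_num_mtt() {
    target=$(( 2 * $1 ))
    n=0
    while [ $(( (1 << n) * (1 << $2) * $3 )) -lt "$target" ]; do
        n=$(( n + 1 ))
    done
    echo "$n"
}

# Apply it to this machine (Linux: MemTotal in /proc/meminfo is in kB).
if [ -r /proc/meminfo ]; then
    mem_kb=$(awk '/^MemTotal:/ { print $2 }' /proc/meminfo)
    page=$(getconf PAGESIZE 2>/dev/null)
    if [ -n "$mem_kb" ] && [ -n "$page" ]; then
        echo "suggested log_num_mtt: $(min_log_num_mtt $(( mem_kb * 1024 )) 1 "$page")"
    fi
fi
```

For the 64 GB, 4 KB page, log_mtts_per_seg = 1 example above, `min_log_num_mtt 68719476736 1 4096` returns 24.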

NOTE: Starting with OFED 2.0, OFED's default kernel parameter values should allow registering twice the physical memory size.

| 20. I'm using Mellanox ConnectX HCA hardware and seeing terrible latency for short messages; how can I fix this? |

Open MPI prior to v1.2.4 did not include specific configuration information to enable RDMA for short messages on ConnectX hardware. As such, Open MPI will default to the safe setting of using send/receive semantics for short messages, which is slower than RDMA.

To enable RDMA for short messages, you can add this snippet to the bottom of the $prefix/share/openmpi/mca-btl-openib-hca-params.ini file:

    [Mellanox Hermon]
    vendor_id = 0x2c9,0x5ad,0x66a,0x8f1,0x1708
    vendor_part_id = 25408,25418,25428
    use_eager_rdma = 1
    mtu = 2048

Enabling short message RDMA will significantly reduce short message latency, especially on ConnectX (and newer) Mellanox hardware.

| 21. How much registered memory is used by Open MPI? Is there a way to limit it? (openib BTL) |

Open MPI uses registered memory in several places, and therefore the total amount used is calculated by a somewhat-complex formula that is directly influenced by MCA parameter values.

It can be desirable to enforce a hard limit on how much registered memory is consumed by MPI applications. For example, some platforms have limited amounts of registered memory available; setting limits on a per-process level can ensure fairness between MPI processes on the same host. Another reason is that registered memory is not swappable; as more memory is registered, less memory is available for (non-registered) process code and data. When little unregistered memory is available, swap thrashing of unregistered memory can occur.

Each instance of the openib BTL module in an MPI process (i.e., one per HCA port and LID) will use up to a maximum of the sum of the following quantities:

| Description | Amount | Explanation |
|---|---|---|
| User memory | mpool_rdma_rcache_size_limit | By default, Open MPI will register as much user memory as necessary (upon demand). However, if mpool_rdma_rcache_size_limit is greater than zero, it is the upper limit (in bytes) of user memory that will be registered. User memory is registered for ongoing MPI communications (e.g., long message sends and receives) and via the MPI_ALLOC_MEM function. Note that this MCA parameter was introduced in v1.2.1. |
| Internal eager fragment buffers | 2 × btl_openib_free_list_max × (btl_openib_eager_limit + overhead) | A "free list" of buffers used in the openib BTL for "eager" fragments (e.g., the first fragment of a long message). Two free lists are created: one for sends and one for receives. By default, btl_openib_free_list_max is -1, and the list size is unbounded, meaning that Open MPI will try to allocate as many registered buffers as it needs. If btl_openib_free_list_max is greater than 0, the list will be limited to this size. Each entry in the list is approximately btl_openib_eager_limit bytes; some additional overhead space is required for alignment and internal accounting. btl_openib_eager_limit is the maximum size of an eager fragment. |
| Internal send/receive buffers | 2 × btl_openib_free_list_max × (btl_openib_max_send_size + overhead) | A "free list" of buffers used for send/receive communication in the openib BTL. Two free lists are created: one for sends and one for receives. By default, btl_openib_free_list_max is -1, and the list size is unbounded, meaning that Open MPI will allocate as many registered buffers as it needs. If btl_openib_free_list_max is greater than 0, the list will be limited to this size. Each entry in the list is approximately btl_openib_max_send_size bytes; some additional overhead space is required for alignment and internal accounting. btl_openib_max_send_size is the maximum size of a send/receive fragment. |
| Internal "eager" RDMA buffers | btl_openib_eager_rdma_num × btl_openib_max_eager_rdma × (btl_openib_eager_limit + overhead) | If btl_openib_use_eager_rdma is true, RDMA buffers are used for eager fragments (because RDMA semantics can be faster than send/receive semantics in some cases), and an additional set of registered buffers is created (as needed). Each MPI process will use RDMA buffers for eager fragments with up to btl_openib_max_eager_rdma MPI peers. Upon receiving the btl_openib_eager_rdma_threshold'th message from an MPI peer process, if both sides have not yet set up btl_openib_max_eager_rdma sets of eager RDMA buffers, a new set will be created. The set will contain btl_openib_eager_rdma_num buffers; each buffer will be btl_openib_eager_limit bytes (i.e., the maximum size of an eager fragment). |

In general, when any of the individual limits are reached, Open MPI

will try to free up registered memory (in the case of registered user

memory) and/or wait until message passing progresses and more

registered memory becomes available.
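The table can be turned into a rough per-module upper bound. The Python sketch below simply sums the four rows; the function name is hypothetical, and the per-buffer `overhead` argument is an assumption, since the real alignment and bookkeeping overhead is internal to Open MPI. The bound applies to each openib BTL module (one per HCA port and LID), so a process with several modules can register correspondingly more.

```python
import math


def openib_registered_bound(rcache_size_limit: int, free_list_max: int,
                            eager_limit: int, max_send_size: int,
                            eager_rdma_num: int, max_eager_rdma: int,
                            use_eager_rdma: bool = True,
                            overhead: int = 0) -> float:
    """Upper bound (bytes) on registered memory for one openib BTL module.

    Sums the rows of the table. `overhead` is a hypothetical per-buffer
    allowance. Returns math.inf if any component is unbounded.
    """
    # User memory: a limit of 0 (the default) means "register on demand".
    user = rcache_size_limit if rcache_size_limit > 0 else math.inf

    # Two free lists (sends + receives) for eager and for send/receive buffers.
    # -1 (the default) leaves them unbounded; treating 0 the same way is an assumption.
    if free_list_max > 0:
        eager_lists = 2 * free_list_max * (eager_limit + overhead)
        sendrecv_lists = 2 * free_list_max * (max_send_size + overhead)
    else:
        eager_lists = sendrecv_lists = math.inf

    # Eager RDMA buffers exist only when eager RDMA is enabled.
    eager_rdma = 0
    if use_eager_rdma:
        eager_rdma = eager_rdma_num * max_eager_rdma * (eager_limit + overhead)

    return user + eager_lists + sendrecv_lists + eager_rdma


# Hypothetical settings: 1 GiB user-memory cap, 256-entry free lists, 64 KiB
# max send size, plus the eager RDMA defaults listed later in this FAQ (16, 16, 12k).
print(openib_registered_bound(1 << 30, 256, 12 * 1024, 64 * 1024, 16, 16))  # 1116733440
print(openib_registered_bound(0, -1, 12 * 1024, 64 * 1024, 16, 16))         # inf
```

With the defaults (no user-memory limit and btl_openib_free_list_max of -1) the sketch reports an unbounded total, matching the "register as much as needed" behavior described above.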

Use the ompi_info command to view the values of the MCA parameters described above in your Open MPI installation:

```shell
# Note that Open MPI v1.8 and later require the "--level 9"
# CLI option to display all available MCA parameters.
shell$ ompi_info --param btl openib --level 9
```

See this FAQ entry

for information on how to set MCA parameters at run-time.

| 22. How do I get Open MPI working on Chelsio iWARP devices? (openib BTL) |

Please see this FAQ entry for

an important note about iWARP support (particularly for Open MPI

versions starting with v5.0.0).

For the Chelsio T3 adapter, you must have at least OFED v1.3.1 and

Chelsio firmware v6.0. Download the firmware from service.chelsio.com and put the uncompressed t3fw-6.0.0.bin

file in /lib/firmware. Then reload the iw_cxgb3 module and bring

up the ethernet interface to flash this new firmware. For example:

```shell
# Note that the URL for the firmware may change over time
shell# cd /lib/firmware
shell# wget http://service.chelsio.com/drivers/firmware/t3/t3fw-6.0.0.bin.gz
[...wget output...]
shell# gunzip t3fw-6.0.0.bin.gz
shell# rmmod iw_cxgb3 cxgb3
shell# modprobe iw_cxgb3

# This last step *may* happen automatically, depending on your
# Linux distro (assuming that the ethernet interface has previously
# been properly configured and is ready to bring up). Substitute the
# proper ethernet interface name for your T3 (vs. ethX).
shell# ifup ethX
```

If all goes well, you should see a message similar to the following in

your syslog 15-30 seconds later:

```
kernel: cxgb3 0000:0c:00.0: successful upgrade to firmware 6.0.0
```

Open MPI will work without any specific configuration to the openib

BTL. Users wishing to performance-tune the configurable options may

wish to inspect the receive queue values. Those can be found in the

"Chelsio T3" section of mca-btl-openib-hca-params.ini.

| 23. I'm getting "ibv_create_qp: returned 0 byte(s) for max inline data" errors; what is this, and how do I fix it? (openib BTL) |

Prior to Open MPI v1.0.2, the OpenFabrics (then known as "OpenIB") verbs BTL component did not check whether the OpenIB API returned an erroneous value (0), and would hang during startup. Starting with v1.0.2, error messages of the following form are reported:

```
[0,1,0][btl_openib_endpoint.c:889:mca_btl_openib_endpoint_create_qp]
ibv_create_qp: returned 0 byte(s) for max inline data
```

This is caused by an error in older versions of the OpenIB user

library. Upgrading your OpenIB stack to recent versions of the

OpenFabrics software should resolve the problem. See this post on the

Open MPI user's list for more details:

| 24. My bandwidth seems [far] smaller than it should be; why? Can this be fixed? (openib BTL) |

Open MPI, by default, uses a pipelined RDMA protocol.

Additionally, in the v1.0 series of Open MPI, small messages use

send/receive semantics (instead of RDMA — small message RDMA was added in the v1.1 series).

For some applications, this may result in lower-than-expected

bandwidth. However, Open MPI also supports caching of registrations

in a most recently used (MRU) list — this bypasses the pipelined RDMA

and allows messages to be sent faster (in some cases).

For the v1.1 series, see this FAQ entry for more information about small message RDMA, its effect on latency, and how to tune it.

To enable the "leave pinned" behavior, set the MCA parameter

mpi_leave_pinned to 1. For example:

```shell
shell$ mpirun --mca mpi_leave_pinned 1 ...
```

NOTE: The mpi_leave_pinned parameter was

broken in Open MPI v1.3 and v1.3.1 (see

this announcement). mpi_leave_pinned functionality was fixed in v1.3.2.

This will enable the MRU cache and will typically increase bandwidth

performance for applications which reuse the same send/receive

buffers.

NOTE: The v1.3 series enabled "leave

pinned" behavior by default when applicable; it is usually

unnecessary to specify this flag anymore.

| 25. How do I tune small messages in Open MPI v1.1 and later versions? (openib BTL) |

Starting with Open MPI version 1.1, "short" MPI messages are

sent, by default, via RDMA to a limited set of peers (for versions

prior to v1.2, only when the shared receive queue is not used). This

provides the lowest possible latency between MPI processes.

However, this behavior is not enabled between all process peer pairs

because it can quickly consume large amounts of resources on nodes

(specifically: memory must be individually pre-allocated for each

process peer to perform small message RDMA; for large MPI jobs, this

can quickly cause individual nodes to run out of memory). Outside the

limited set of peers, send/receive semantics are used (meaning that

they will generally incur a greater latency, but not consume as many

system resources).

This behavior is tunable via several MCA parameters:

- btl_openib_use_eager_rdma (default value: 1): The default of 1 means that the small message behavior described above (RDMA to a limited set of peers, send/receive to everyone else) is enabled. Setting this parameter to 0 disables all small message RDMA in the openib BTL component.
- btl_openib_eager_rdma_threshold (default value: 16): This is the number of short messages that must be received from a peer before Open MPI will set up an RDMA connection to that peer. This mechanism tries to set up RDMA connections only to those peers who will frequently send many short messages (i.e., it avoids consuming valuable RDMA resources for peers who only exchange a few "startup" control messages).
- btl_openib_max_eager_rdma (default value: 16): This parameter controls the maximum number of peers that can receive an RDMA connection for short messages. It is not advisable to change this value to a very large number because the polling time increases with the number of connections; as a direct result, short message latency will increase.
- btl_openib_eager_rdma_num (default value: 16): This parameter controls the maximum number of pre-allocated buffers allocated to each peer for small messages.
- btl_openib_eager_limit (default value: 12k): The maximum size of small messages (in bytes).

Note that long messages use a different protocol than short messages;

messages over a certain size always use RDMA. Long messages are not

affected by the btl_openib_use_eager_rdma MCA parameter.

Also note that, as stated above, prior to v1.2, small message RDMA is

not used when the shared receive queue is used.
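The interaction between btl_openib_eager_rdma_threshold, btl_openib_max_eager_rdma, and btl_openib_eager_limit can be summarized with a toy model. The Python class below is hypothetical and ignores details such as the shared receive queue restriction, both sides having to agree on the connection, and the per-peer buffer count (btl_openib_eager_rdma_num); it only illustrates which peers end up with small-message RDMA.

```python
from dataclasses import dataclass, field


@dataclass
class EagerRdmaModel:
    """Toy model of which peers get small-message RDMA (hypothetical, not Open MPI code)."""
    threshold: int = 16            # btl_openib_eager_rdma_threshold
    max_eager_rdma: int = 16       # btl_openib_max_eager_rdma (max peers)
    eager_limit: int = 12 * 1024   # btl_openib_eager_limit (bytes)
    use_eager_rdma: bool = True    # btl_openib_use_eager_rdma
    counts: dict = field(default_factory=dict)     # short messages seen per peer
    rdma_peers: set = field(default_factory=set)   # peers with eager RDMA set up

    def receive_short(self, peer: str) -> None:
        """Count a short message from `peer`; set up eager RDMA once the threshold is hit."""
        self.counts[peer] = self.counts.get(peer, 0) + 1
        if (self.use_eager_rdma
                and peer not in self.rdma_peers
                and self.counts[peer] >= self.threshold
                and len(self.rdma_peers) < self.max_eager_rdma):
            self.rdma_peers.add(peer)

    def path_for(self, peer: str, size: int) -> str:
        """Semantics used for a message of `size` bytes to `peer`."""
        if size > self.eager_limit:
            return "long-message protocol"
        return "eager RDMA" if peer in self.rdma_peers else "send/receive"


m = EagerRdmaModel(threshold=2, max_eager_rdma=1)
for peer in ["a", "a", "b", "b"]:
    m.receive_short(peer)
print(m.path_for("a", 64))        # eager RDMA
print(m.path_for("b", 64))        # send/receive (peer limit already reached)
print(m.path_for("a", 1 << 20))   # long-message protocol
```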

| 26. How do I tune large message behavior in the Open MPI v1.2 series? (openib BTL) |

Note that this answer generally pertains to the Open MPI v1.2

series. Later versions slightly changed how large messages are

handled.

Open MPI uses a few different protocols for large messages. Much

detail is provided in this

paper.

The btl_openib_flags MCA parameter is a set of bit flags that

influences which protocol is used; they generally indicate what kind

of transfers are allowed to send the bulk of long messages.

Specifically, these flags do not regulate the behavior of "match"

headers or other intermediate fragments.

The following flags are available:

- Use send/receive semantics (1): Allow the use of send/receive

semantics.

- Use PUT semantics (2): Allow the sender to use RDMA writes.

- Use GET semantics (4): Allow the receiver to use RDMA reads.

Open MPI defaults to setting both the PUT and GET flags (value 6).

Open MPI uses the following long message protocols:

- RDMA Direct: If RDMA writes or reads are allowed by

btl_openib_flags and the sender's message is already registered

(either by use of the

mpi_leave_pinned MCA parameter or if the buffer was allocated

via MPI_ALLOC_MEM), a slightly simpler protocol is used:

- Send the "match" fragment: the sender sends the MPI message

information (communicator, tag, etc.) to the receiver using copy

in/copy out semantics. No data from the user message is included in

the match header.

- Use RDMA to transfer the message:

- If RDMA reads are enabled and only one network connection is

available between the pair of MPI processes, once the receiver has

posted a matching MPI receive, it issues an RDMA read to get the

message, and sends an ACK back to the sender when the transfer has

completed.

- If the above condition is not met, then RDMA writes must be

enabled (or we would not have chosen this protocol). The receiver

sends an ACK back when a matching MPI receive is posted and the sender

issues an RDMA write across each available network link (i.e., BTL

module) to transfer the message. The RDMA write sizes are weighted

across the available network links. For example, if two MPI processes

are connected by both SDR and DDR IB networks, this protocol will

issue an RDMA write for 1/3 of the entire message across the SDR

network and will issue a second RDMA write for the remaining 2/3 of

the message across the DDR network.

The sender then sends an ACK to the receiver when the transfer has

completed.

NOTE: Per above, if striping across multiple

network interfaces is available, only RDMA writes are used. The

reason that RDMA reads are not used is solely an implementation artifact in Open MPI; we didn't implement it because using RDMA reads only saves the cost of a short message round trip, and the extra code complexity didn't seem worth it for long messages (i.e., the performance difference will be negligible).

Note that the user buffer is not unregistered when the RDMA

transfer(s) is (are) completed.

- RDMA Pipeline: If RDMA Direct was not used and RDMA writes

are allowed by

btl_openib_flags and the sender's message is not

already registered, a 3-phase pipelined protocol is used:

- Send the "match" fragment: the sender sends the MPI message

information (communicator, tag, etc.) and the first fragment of the

user's message using copy in/copy out semantics.

- Send "intermediate" fragments: once the receiver has posted a

matching MPI receive, it sends an ACK back to the sender. The sender

and receiver then start registering memory for RDMA. To cover the

cost of registering the memory, several more fragments are sent to the

receiver using copy in/copy out semantics.

- Transfer the remaining fragments: once memory registrations start

completing on both the sender and the receiver (see the paper for

details), the sender uses RDMA writes to transfer the remaining

fragments in the large message.

Note that phases 2 and 3 occur in parallel. Each phase 3 fragment is

unregistered when its transfer completes (see the

paper for more details).

Also note that one of the benefits of the pipelined protocol is that

large messages will naturally be striped across all available network

interfaces.

The sizes of the fragments in each of the three phases are tunable by

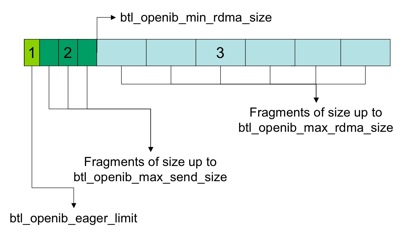

the MCA parameters shown in the figure below (all sizes are in units

of bytes):

- Send/Receive: If RDMA Direct and RDMA Pipeline were not

used, copy in/copy out semantics are used for the whole message (note

that this will happen even if the SEND flag is not set in

btl_openib_flags):

- Send the "match" fragment: the sender sends the MPI message

information (communicator, tag, etc.) and the first fragment of the

user's message using copy in/copy out semantics.

- Send remaining fragments: once the receiver has posted a

matching MPI receive, it sends an ACK back to the sender. The sender

then uses copy in/copy out semantics to send the remaining fragments

to the receiver.

This protocol behaves the same as the RDMA Pipeline protocol when

the btl_openib_min_rdma_size value is infinite.
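One reading of the v1.2 selection rules above, written as a Python sketch. The flag values come from the list above; treating the decision as a pure function of the flags, the registration state, and the number of links is a simplification (real selection also depends on message size and other MCA parameters), and the function names are hypothetical. The `stripe` helper illustrates the SDR/DDR example under the assumption that links are weighted in proportion to their nominal bandwidth (4x SDR = 10 Gb/s, 4x DDR = 20 Gb/s).

```python
SEND, PUT, GET = 1, 2, 4   # btl_openib_flags bits listed above
DEFAULT_FLAGS = PUT | GET  # 6, the default described above


def choose_long_protocol(flags: int, registered: bool, num_links: int) -> str:
    """Pick a v1.2 long-message protocol following the rules above (simplified)."""
    if registered:
        # RDMA Direct: a receiver-side RDMA read needs GET and a single link...
        if flags & GET and num_links == 1:
            return "RDMA Direct (RDMA read)"
        # ...otherwise the sender must be allowed to use RDMA writes.
        if flags & PUT:
            return "RDMA Direct (RDMA write)"
    elif flags & PUT:
        # Unregistered buffer: 3-phase pipeline built on RDMA writes.
        return "RDMA Pipeline"
    # Copy in/copy out for the whole message, even if SEND is not set.
    return "Send/Receive"


def stripe(length: int, bandwidths: list) -> list:
    """Split `length` bytes across links in proportion to their bandwidths."""
    total = sum(bandwidths)
    shares = [length * bw // total for bw in bandwidths]
    shares[-1] += length - sum(shares)  # give any rounding remainder to the last link
    return shares


print(choose_long_protocol(DEFAULT_FLAGS, registered=True, num_links=2))  # RDMA Direct (RDMA write)
print(stripe(3 * 1024 * 1024, [10, 20]))  # SDR + DDR: [1048576, 2097152]
```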

| 27. How do I tune large message behavior in the Open MPI v1.3 (and later) series? (openib BTL) |

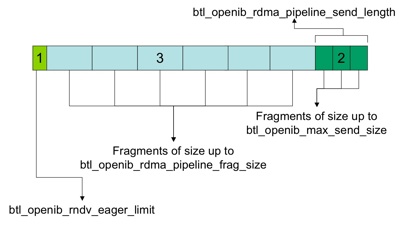

The Open MPI v1.3 (and later) series generally use the same

protocols for sending long messages as described for the v1.2

series, but the MCA parameters for the RDMA Pipeline protocol

were both moved and renamed (all sizes are in units of bytes):

The change to move the "intermediate" fragments to the end of the

message was made to better support applications that call fork().

Specifically, there is a problem in Linux when a process with

registered memory calls fork(): the registered memory will

physically not be available to the child process (touching memory in

the child that is registered in the parent will cause a segfault or

other error). Because memory is registered in units of pages, the end

of a long message is likely to share the same page as other heap

memory in use by the application. If this last page of the large

message is registered, then all the memory in that page — to include

other buffers that are not part of the long message — will not be

available to the child. By moving the "intermediate" fragments to

the end of the message, the end of the message will be sent with copy

in/copy out semantics and, more importantly, will not have its page

registered. This increases the chance that child processes will be

able to access other memory in the same page as the end of the large

message without problems.
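The page-sharing problem is easiest to see with concrete addresses. The Python sketch below assumes 4 KB pages and a hypothetical message that starts 100 bytes into page 16; it shows that registering the whole message pins its last page (which also holds other heap data), while sending the final fragments with copy in/copy out keeps that page unregistered. The tail length is also hypothetical.

```python
PAGE = 4096  # assumed page size (4 KB)


def pinned_pages(start: int, end: int, page_size: int = PAGE) -> range:
    """Page numbers pinned when registering the byte range [start, end).

    Registration works on whole pages, so the range is rounded outward.
    """
    return range(start // page_size, (end - 1) // page_size + 1)


# Hypothetical 12 KB message starting 100 bytes into page 16; its last
# 100 bytes sit at the start of page 19, which also holds other heap data.
addr, length = 16 * PAGE + 100, 3 * PAGE
end = addr + length

whole = pinned_pages(addr, end)
# v1.3 style: the final PAGE bytes go by copy in/copy out,
# so only the bytes before them are registered for RDMA.
tail_copied = PAGE
rdma_only = pinned_pages(addr, end - tail_copied)

print(list(whole))      # [16, 17, 18, 19]
print(list(rdma_only))  # [16, 17, 18]
```

Page 16 is also only partly used by the message in this example; the text above concerns the end of the message, so the sketch only tracks the tail page.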

Some notes about these parameters:

- btl_openib_rndv_eager_limit defaults to the same value as btl_openib_eager_limit (the size for "small" messages). It is a separate parameter in case you want/need different values.
- The btl_openib_min_rdma_size parameter was an absolute offset into the message; it was replaced by btl_openib_rdma_pipeline_send_length, which is a length.

Note that messages must be larger than btl_openib_min_rdma_pipeline_size (a new MCA parameter in the v1.3 series) to use the RDMA Direct or RDMA Pipeline protocols. Messages shorter than this length will use the Send/Receive protocol (even if the SEND flag is not set on btl_openib_flags).

| 28. How does the mpi_leave_pinned parameter affect large message transfers? (openib BTL) |

NOTE: The mpi_leave_pinned parameter was

broken in Open MPI v1.3 and v1.3.1 (see

this announcement). mpi_leave_pinned functionality was fixed in v1.3.2.

When mpi_leave_pinned is set to 1, Open MPI aggressively

tries to pre-register user message buffers so that the RDMA Direct

protocol can be used. Additionally, user buffers are left

registered so that the de-registration and re-registration costs are

not incurred if the same buffer is used in a future message passing

operation.

NOTE: Starting with Open MPI v1.3,

mpi_leave_pinned is automatically set to 1 by default when

applicable. It is therefore usually unnecessary to set this value

manually.

NOTE: The mpi_leave_pinned MCA parameter

has some restrictions on how it can be set starting with Open MPI

v1.3.2. See this FAQ

entry for details.

Leaving user memory registered when sends complete can be extremely

beneficial for applications that repeatedly re-use the same send

buffers (such as ping-pong benchmarks). Additionally, the fact that a

single RDMA transfer is used and the entire process runs in hardware

with very little software intervention results in utilizing the

maximum possible bandwidth.

Leaving user memory registered has disadvantages, however. Bad Things happen if registered memory is free()ed, for example: it can silently invalidate Open MPI's cache of which memory is registered and which is not. The MPI layer usually has no visibility into when the MPI application calls free() (or otherwise frees memory, such as through munmap() or sbrk()). Open MPI has implemented complicated schemes that intercept calls to return memory to the OS. Upon intercept, Open MPI examines whether the memory is registered, and if so, unregisters it before returning the memory to the OS.

These schemes are best described as "icky" and can actually cause

real problems in applications that provide their own internal memory

allocators. Additionally, only some applications (most notably,

ping-pong benchmark applications) benefit from "leave pinned"

behavior — those who consistently re-use the same buffers for sending

and receiving long messages.

*It is for these reasons that "leave pinned" behavior is not enabled

by default.* Note that other MPI implementations enable "leave

pinned" behavior by default.

Note that another pipeline-related MCA parameter also exists:

mpi_leave_pinned_pipeline. Setting this parameter to 1 enables the

use of the RDMA Pipeline protocol, but simply leaves the user's

memory registered when RDMA transfers complete (eliminating the cost

of registering / unregistering memory during the pipelined sends /

receives). This can be beneficial to a small class of user MPI

applications.
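A toy model of the "leave pinned" idea, including the intercept step described above. The Python class below is hypothetical and far simpler than Open MPI's registration cache: it counts how often a (normally expensive) registration would happen and drops cached regions when memory is about to be returned to the OS.

```python
class RegistrationCache:
    """Toy "leave pinned" cache (hypothetical; not Open MPI's implementation)."""

    def __init__(self) -> None:
        self.regions = set()      # registered (start, end) byte ranges
        self.registrations = 0    # how many registrations were performed

    def acquire(self, start: int, length: int) -> bool:
        """Make [start, start + length) ready for RDMA; return True on a cache hit."""
        end = start + length
        if any(s <= start and end <= e for s, e in self.regions):
            return True           # already registered: no cost
        self.registrations += 1   # stand-in for an expensive registration call
        self.regions.add((start, end))
        return False              # left registered after use ("leave pinned")

    def on_memory_release(self, start: int, length: int) -> None:
        """Intercept hook: unregister cached regions overlapping memory returned to the OS."""
        end = start + length
        self.regions = {(s, e) for s, e in self.regions if e <= start or s >= end}


cache = RegistrationCache()
for _ in range(1000):                      # ping-pong style reuse of one 1 MiB buffer
    cache.acquire(0x1000, 1 << 20)
print(cache.registrations)                 # 1

cache.on_memory_release(0x1000, 1 << 20)   # application frees the buffer
cache.acquire(0x1000, 1 << 20)
print(cache.registrations)                 # 2
```

Without the `on_memory_release` call, the cache would keep reporting a hit for memory that is no longer registered, which is the silent invalidation problem described above.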

| 29. How does the mpi_leave_pinned parameter affect memory management? (openib BTL) |

NOTE: The mpi_leave_pinned parameter was

broken in Open MPI v1.3 and v1.3.1 (see

this announcement). mpi_leave_pinned functionality was fixed in v1.3.2.

When mpi_leave_pinned is set to 1, Open MPI aggressively

leaves user memory registered with the OpenFabrics network stack after

the first time it is used with a send or receive MPI function. This

allows Open MPI to avoid expensive registration / deregistration

function invocations for each send or receive MPI function.

NOTE: The mpi_leave_pinned MCA parameter

has some restrictions on how it can be set starting with Open MPI

v1.3.2. See this FAQ

entry for details.

However, registered memory has two drawbacks:

- There is only so much registered memory available.

- User applications may free the memory, thereby invalidating Open

MPI's internal table of what memory is already registered.

The second problem can lead to silent data corruption or process

failure. As such, this behavior must be disallowed. Note that the

real issue is not simply freeing memory, but rather returning

registered memory to the OS (where it can potentially be used by a

different process). Open MPI has two methods of solving the issue:

- Using an internal memory manager; effectively overriding calls to

malloc(), free(), mmap(), munmap(), etc.

- Telling the OS to never return memory from the process to the

OS

How these options are used differs between Open MPI v1.2 (and

earlier) and Open

MPI v1.3 (and later).

| 30. How does the mpi_leave_pinned parameter affect memory management in Open MPI v1.2? (openib BTL) |

Be sure to read this FAQ entry first.

Open MPI 1.2 and earlier on Linux used the ptmalloc2 memory allocator

linked into the Open MPI libraries to handle memory deregistration.

On Mac OS X, it used an interface provided by Apple for hooking into the virtual memory system, and on other platforms no safe memory registration was available. The ptmalloc2 code could be disabled at Open MPI configure time with the option --without-memory-manager; however, it could not be avoided once Open MPI was built.

ptmalloc2 can cause large memory utilization numbers for a small

number of applications and has a variety of link-time issues.

Therefore, by default Open MPI did not use the registration cache,

resulting in lower peak bandwidth. The inability to disable ptmalloc2

after Open MPI was built also resulted in headaches for users.

Open MPI v1.3 handles

leave pinned memory management differently.

| 31. How does the mpi_leave_pinned parameter affect memory management in Open MPI v1.3? (openib BTL) |

NOTE: The mpi_leave_pinned parameter was

broken in Open MPI v1.3 and v1.3.1 (see

this announcement). mpi_leave_pinned functionality was fixed in v1.3.2.

Be sure to read this FAQ entry first.

NOTE: The mpi_leave_pinned MCA parameter

has some restrictions on how it can be set starting with Open MPI

v1.3.2. See this FAQ

entry for details.

With Open MPI 1.3, Mac OS X uses the same hooks as the 1.2 series,

and most operating systems do not provide pinning support. However,

the pinning support on Linux has changed. ptmalloc2 is now by default

built as a standalone library (with dependencies on the internal Open

MPI libopen-pal library), so that users by default do not have the

problematic code linked in with their application. Further, if

OpenFabrics networks are being used, Open MPI will use the mallopt() function to disable returning memory to the OS if no other hooks are provided, resulting in higher peak bandwidth by default.

To utilize the independent ptmalloc2 library, users need to add

-lopenmpi-malloc to the link command for their application:

```shell
shell$ mpicc foo.o -o foo -lopenmpi-malloc
```

Linking in libopenmpi-malloc will result in the OpenFabrics BTL not

enabling mallopt() but using the hooks provided with the ptmalloc2

library instead.

To revert to the v1.2 (and prior) behavior, with ptmalloc2 folded into

libopen-pal, Open MPI can be built with the

--enable-ptmalloc2-internal configure flag.

When not using ptmalloc2, mallopt() behavior can be disabled by

disabling mpi_leave_pinned:

```shell
shell$ mpirun --mca mpi_leave_pinned 0 ...
```

Because mpi_leave_pinned behavior is usually only useful for

synthetic MPI benchmarks, the never-return-memory-to-the-OS behavior

was resisted by the Open MPI developers for a long time. Ultimately,

it was adopted because a) it is less harmful than imposing the

ptmalloc2 memory manager on all applications, and b) it was deemed

important to enable mpi_leave_pinned behavior by default since Open

MPI performance kept getting negatively compared to other MPI

implementations that enable similar behavior by default.

| 32. How can I set the mpi_leave_pinned MCA parameter? (openib BTL) |

NOTE: The mpi_leave_pinned parameter was

broken in Open MPI v1.3 and v1.3.1 (see

this announcement). mpi_leave_pinned functionality was fixed in v1.3.2.

As with all MCA parameters, the mpi_leave_pinned parameter (and

mpi_leave_pinned_pipeline parameter) can be set from the mpirun

command line:

```shell
shell$ mpirun --mca mpi_leave_pinned 1 ...
```

Prior to the v1.3 series, all the usual methods

to set MCA parameters could be used to set mpi_leave_pinned.

However, starting with v1.3.2, not all of the usual methods to set

MCA parameters apply to mpi_leave_pinned. Due to various

operating system memory subsystem constraints, Open MPI must react to

the setting of the mpi_leave_pinned parameter in each MPI process

before MPI_INIT is invoked. Specifically, some of Open MPI's MCA

parameter propagation mechanisms are not activated until MPI_INIT, which is too late for mpi_leave_pinned.

As such, only the following MCA parameter-setting mechanisms can be

used for mpi_leave_pinned and mpi_leave_pinned_pipeline:

- Command line: See the example above.

- Environment variable: Setting

OMPI_MCA_mpi_leave_pinned to 1

before invoking mpirun.

To be clear: you cannot set the mpi_leave_pinned MCA parameter via

Aggregate MCA parameter files or normal MCA parameter files. This is

expected to be an acceptable restriction, however, since the default

value of the mpi_leave_pinned parameter is "-1", meaning

"determine at run-time if it is worthwhile to use leave-pinned

behavior." Specifically, if mpi_leave_pinned is set to -1 and any of the following are true when each MPI process starts, then Open MPI will use leave-pinned behavior:

- Either the environment variable

OMPI_MCA_mpi_leave_pinned or

OMPI_MCA_mpi_leave_pinned_pipeline is set to a positive value (note

that the "mpirun --mca mpi_leave_pinned 1 ..." command-line syntax

simply results in setting these environment variables in each MPI

process)

- Any of the following files / directories can be found in the filesystem where the MPI process is running:
  - /sys/class/infiniband
  - /dev/open-mx
  - /dev/myri[0-9]

Note that if either the environment variable

OMPI_MCA_mpi_leave_pinned or OMPI_MCA_mpi_leave_pinned_pipeline is

set to "-1", then the above indicators are ignored and Open MPI

will not use leave-pinned behavior.
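The run-time check for the default value of -1 can be approximated as follows. This Python sketch is not Open MPI code; it encodes only the rules listed above, the function names are hypothetical, and the handling of an explicit 0 (treated as "off") is an assumption. The `env` and `path_matches` arguments exist so the logic can be exercised without real devices.

```python
import glob
import os

LEAVE_PINNED_VARS = ("OMPI_MCA_mpi_leave_pinned",
                     "OMPI_MCA_mpi_leave_pinned_pipeline")
# Indicators listed above; glob handles the [0-9] character class.
INDICATOR_PATTERNS = ("/sys/class/infiniband", "/dev/open-mx", "/dev/myri[0-9]")


def _exists(pattern: str) -> bool:
    """True if any filesystem path matches `pattern`."""
    return bool(glob.glob(pattern))


def leave_pinned_default(env=None, path_matches=None) -> bool:
    """Approximate the decision made when mpi_leave_pinned is -1."""
    env = os.environ if env is None else env
    path_matches = path_matches or _exists

    values = [int(env[name]) for name in LEAVE_PINNED_VARS if name in env]
    if -1 in values:
        return False          # explicit "-1": the indicators are ignored
    if any(v > 0 for v in values):
        return True           # a positive value enables leave-pinned behavior
    if values:
        return False          # assumption: an explicit 0 means "off"
    return any(path_matches(p) for p in INDICATOR_PATTERNS)


print(leave_pinned_default({"OMPI_MCA_mpi_leave_pinned": "1"}))  # True
print(leave_pinned_default())  # depends on this machine's environment and devices
```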

| 33. I got an error message from Open MPI about not using the default GID prefix. What does that mean, and how do I fix it? (openib BTL) |

Users may see the following error message from Open MPI v1.2:

```
WARNING: There are more than one active ports on host '%s', but the
default subnet GID prefix was detected on more than one of these
ports. If these ports are connected to different physical OFA
networks, this configuration will fail in Open MPI. This version of
Open MPI requires that every physically separate OFA subnet that is
used between connected MPI processes must have different subnet ID
values.
```

This is a complicated issue.

What it usually means is that you have a host connected to multiple,

physically separate OFA-based networks, at least 2 of which are using

the factory-default subnet ID value (FE:80:00:00:00:00:00:00). Open

MPI therefore cannot tell these networks apart during its

reachability computations, and therefore will likely fail. You need

to reconfigure your OFA networks to have different subnet ID values,

and then Open MPI will function properly.

Please note that the same issue can occur when any two physically

separate subnets share the same subnet ID value — not just the

factory-default subnet ID value. However, Open MPI only warns about

the factory default subnet ID value because most users do not bother

to change it unless they know that they have to.

All this being said, note that there are valid network configurations

where multiple ports on the same host can share the same subnet ID

value. For example, two ports from a single host can be connected to

the same network as a bandwidth multiplier or a high-availability

configuration. For this reason, Open MPI only warns about finding

duplicate subnet ID values, and that warning can be disabled. Setting

the btl_openib_warn_default_gid_prefix MCA parameter to 0 will

disable this warning.

See this FAQ entry for instructions

on how to set the subnet ID.

Here's a more detailed explanation:

Since Open MPI can utilize multiple network links to send MPI traffic,

it needs to be able to compute the "reachability" of all network

endpoints that it can use. Specifically, for each network endpoint,

Open MPI calculates which other network endpoints are reachable.

In OpenFabrics networks, Open MPI uses the subnet ID to differentiate

between subnets — assuming that if two ports share the same subnet

ID, they are reachable from each other. If multiple, physically

separate OFA networks use the same subnet ID (such as the default

subnet ID), it is not possible for Open MPI to tell them apart and

therefore reachability cannot be computed properly.
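The reachability assumption and the default-prefix warning can be sketched in a few lines of Python. The (host, port, subnet ID) records, the second subnet ID, and the function names are hypothetical; the point is that two physically separate fabrics that both use FE:80:00:00:00:00:00:00 are indistinguishable to a check that only compares subnet IDs.

```python
from collections import defaultdict

DEFAULT_SUBNET_ID = 0xFE80_0000_0000_0000  # FE:80:00:00:00:00:00:00


def reachable(port_a: tuple, port_b: tuple) -> bool:
    """Open MPI's assumption: ports with the same subnet ID can reach each other."""
    return port_a[2] == port_b[2]


def default_prefix_warning(ports: list) -> list:
    """Hosts with more than one active port on the factory-default subnet ID."""
    per_host = defaultdict(int)
    for host, _port, subnet_id in ports:
        if subnet_id == DEFAULT_SUBNET_ID:
            per_host[host] += 1
    return sorted(host for host, count in per_host.items() if count > 1)


# (host, port, subnet ID): A1/B1 are on Switch1, A2/B2 on a separate Switch2,
# but both fabrics kept the default subnet ID.
ports = [("A", "A1", DEFAULT_SUBNET_ID), ("A", "A2", DEFAULT_SUBNET_ID),
         ("B", "B1", DEFAULT_SUBNET_ID), ("B", "B2", DEFAULT_SUBNET_ID)]
print(reachable(ports[0], ports[3]))   # True, although A1 and B2 cannot talk
print(default_prefix_warning(ports))   # ['A', 'B']

# After giving Switch2's fabric its own (hypothetical) subnet ID:
SWITCH2_ID = 0xFE80_0000_0000_0002
fixed = [("A", "A1", DEFAULT_SUBNET_ID), ("A", "A2", SWITCH2_ID),
         ("B", "B1", DEFAULT_SUBNET_ID), ("B", "B2", SWITCH2_ID)]
print(reachable(fixed[0], fixed[3]))   # False
print(default_prefix_warning(fixed))   # []
```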

| 34. What subnet ID / prefix value should I use for my OpenFabrics networks? |

You can use any subnet ID / prefix value that you want.

However, Open MPI v1.1 and v1.2 both require that every physically

separate OFA subnet that is used between connected MPI processes must

have different subnet ID values.

For example, suppose you have two hosts (A and B), each with two ports (A1, A2, B1, and B2). If A1 and B1 are connected to Switch1, and A2 and B2 are connected to Switch2, and Switch1 and Switch2 are not reachable from each other, then these two switches must be on subnets with different ID values.

See this FAQ entry for instructions

on how to set the subnet ID.

| 35. How do I set my subnet ID? |

It depends on what Subnet Manager (SM) you are using. Note that changing the subnet ID will likely kill

any jobs currently running on the fabric!

- OpenSM: The SM contained in the OpenFabrics Enterprise

Distribution (OFED) is called OpenSM. The instructions below pertain

to OFED v1.2 and beyond; they may or may not work with earlier

versions.

- Stop any OpenSM instances on your cluster:

```shell
shell# /etc/init.d/opensm stop
```

- Run a single OpenSM iteration:

The -o option causes OpenSM to run for one loop and exit. The -c option tells OpenSM to create an "options" text file.

- The OpenSM options file will be generated under

/var/cache/opensm/opensm.opts. Open the file and find the line with

subnet_prefix. Replace the default value prefix with the new one.

- Restart OpenSM:

```shell
shell# /etc/init.d/opensm start
```

- Cisco High Performance Subnet Manager (HSM): The Cisco HSM has a console application that can dynamically change various characteristics of the IB fabrics without restarting. The Cisco HSM works on both the OFED InfiniBand stack and an older, Cisco-proprietary "Topspin" InfiniBand stack. Please consult the Cisco HSM (or switch) documentation for specific instructions on how to change the subnet prefix.

- Other SM: Consult that SM's instructions for how to change the subnet prefix.
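The OpenSM editing step above can also be scripted. This is a minimal sketch, assuming the options file contains a line of the form "subnet_prefix <value>" (for example, subnet_prefix 0xfe80000000000000); set_subnet_prefix is a hypothetical helper, it does not validate the new value, and you should keep a backup copy of the file before running it.

```shell
#!/bin/sh
# Hypothetical helper: replace the value on the "subnet_prefix" line of an
# OpenSM options file. Assumes a "subnet_prefix <value>" line format.
set_subnet_prefix() {
    opts_file=$1
    new_prefix=$2

    # Refuse to touch files that do not contain the expected line.
    if ! grep -q '^subnet_prefix[[:space:]]' "$opts_file"; then
        echo "no subnet_prefix line found in $opts_file" >&2
        return 1
    fi

    # Write the edited copy next to the original, then move it into place
    # (sed -i is a common extension, but it is not POSIX).
    sed "s/^subnet_prefix[[:space:]].*/subnet_prefix $new_prefix/" \
        "$opts_file" > "$opts_file.new" && mv "$opts_file.new" "$opts_file"
}
```

It would be run after OpenSM has generated the options file and before OpenSM is restarted, e.g., shell# set_subnet_prefix /var/cache/opensm/opensm.opts 0xfe80000000000001 (the prefix value here is only illustrative).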

| 36. In a configuration with multiple host ports on the same fabric, what connection pattern does Open MPI use? (openib BTL) |

When multiple active ports exist on the same physical fabric between multiple hosts in an MPI job, Open MPI will attempt to use them all by default. Open MPI makes several assumptions regarding active ports when establishing connections between two hosts. Active ports that have the same subnet ID are assumed to be connected to the same physical fabric; that is, communication is possible between these ports. Active ports with different subnet IDs are assumed to be connected to different physical fabrics, so no communication is possible between them. It is therefore very important that active ports on the same host that are on physically separate fabrics have different subnet IDs. Otherwise, Open MPI may attempt to establish communication between active ports on different physical fabrics. The subnet manager allows subnet prefixes to be assigned by the administrator, which should be done when multiple fabrics are in use.

The following is a brief description of how connections are established between multiple ports. During initialization, each process discovers all active ports (and their corresponding subnet IDs) on the local host and shares this information with every other process in the job. Each process then examines all active ports (and the corresponding subnet IDs) of every other process in the job and makes a one-to-one assignment of active ports within the same subnet. If the number of active ports within a subnet differs between the local process and the remote process, only as many ports as the smaller of the two counts are assigned, leaving the rest of the active ports out of the assignment between these two processes. Connections are not established during MPI_INIT; instead, the active port assignment is cached, and upon the first attempted use of an active port to send data to the remote process (e.g., via MPI_SEND), a queue pair (i.e., a connection) is established between these ports. Active ports are used for communication in a round-robin fashion so that connections are established and used in a fair manner.
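The one-to-one assignment described above can be illustrated with a small shell function. This is a hypothetical sketch, not Open MPI's actual code: each port is written as subnet_id:port_name, ports are paired in the order they are listed, and any ports left over on the side with more ports in a subnet are simply not paired.

```shell
#!/bin/sh
# Hypothetical illustration of the per-subnet one-to-one port assignment;
# it is not Open MPI code. Each argument is a whitespace-separated list of
# "subnet_id:port_name" tokens, e.g. "0xfe80000000000000:A1".
pair_ports() {
    local_ports=$1
    remote_ports=$2

    # Visit each distinct subnet ID found among the local ports.
    for subnet in $(printf '%s\n' $local_ports | cut -d: -f1 | sort -u); do
        i=0
        for lp in $local_ports; do
            [ "${lp%%:*}" = "$subnet" ] || continue
            i=$((i + 1))
            # Pair the i-th local port with the i-th remote port on the
            # same subnet; if the remote side has fewer ports, this local
            # port is left out of the assignment.
            j=0
            for rp in $remote_ports; do
                [ "${rp%%:*}" = "$subnet" ] || continue
                j=$((j + 1))
                if [ "$j" -eq "$i" ]; then
                    echo "${lp#*:} <-> ${rp#*:}"
                    break
                fi
            done
        done
    done
}

# Host A has two ports on subnet ...00 and one on ...01; host B has one
# port on ...00 and two on ...01. Prints "A1 <-> B1" then "A3 <-> B3";
# A2 and B4 are left out of the assignment.
pair_ports "0xfe80000000000000:A1 0xfe80000000000000:A2 0xfe80000000000001:A3" \
           "0xfe80000000000000:B1 0xfe80000000000001:B3 0xfe80000000000001:B4"
```

Ports whose subnet IDs appear on only one side never get a partner, which is why physically separate fabrics must not share a subnet ID.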

NOTE: This FAQ entry generally applies to v1.2 and beyond. Prior to v1.2, Open MPI would follow the same scheme outlined above, but would not correctly handle the case where processes within the same MPI job had differing numbers of active ports on the same physical fabric.

| 37. I'm getting lower performance than I expected. Why? |

Measuring performance accurately is an extremely difficult task, especially with fast machines and networks. Be sure to read this FAQ entry for many suggestions on benchmarking performance.

_Pay particular attention to the discussion of processor affinity and NUMA systems_: running benchmarks without processor affinity and/or on CPU sockets that are not directly connected to the bus where the HCA is located can lead to confusing or misleading performance results.
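On Linux, one way to see which NUMA node (and therefore which CPU socket) an HCA is attached to is the numa_node attribute in sysfs. This is a minimal sketch that assumes the layout /sys/class/infiniband/<device>/device/numa_node; hca_numa_nodes is a hypothetical helper, and a reported value of -1 means the kernel does not know the node (common on single-socket machines).

```shell
#!/bin/sh
# Hypothetical helper: print "<device> <numa_node>" for each RDMA device.
# Assumes the Linux layout <base>/<device>/device/numa_node, where <base>
# defaults to /sys/class/infiniband; -1 means "node unknown".
hca_numa_nodes() {
    base=${1:-/sys/class/infiniband}
    for dev_dir in "$base"/*; do
        node_file=$dev_dir/device/numa_node
        [ -r "$node_file" ] || continue
        echo "${dev_dir##*/} $(cat "$node_file")"
    done
}

hca_numa_nodes
```

Benchmark processes can then be kept on cores of that node, for example with numactl's --cpunodebind and --membind options, or with the process-binding options of your Open MPI version.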

| 38. I get bizarre linker warnings / errors / run-time faults when I try to compile my OpenFabrics MPI application statically. How do I fix this? |

Fully static linking is not for the weak, and is not recommended. But it is possible.

Read both this FAQ entry and this FAQ entry in their entirety.

| 39. Can I use system(), popen(), or fork() in an MPI application that uses the OpenFabrics support? (openib BTL) |

The answer is, unfortunately, complicated. Be sure to also see this FAQ entry.

- If you have a Linux kernel before version 2.6.16: no. Some distros may provide patches for older versions (e.g., RHEL4 may someday receive a hotfix).

- If you have a version of OFED before v1.2: sort of. Specifically, newer kernels with OFED 1.0 and OFED 1.1 may generally allow the use of system() and/or the use of fork() as long as the parent does nothing until the child exits.

- If you have a Linux kernel >= v2.6.16 and OFED >= v1.2 and Open MPI >= v1.2.1: yes. Open MPI v1.2.1 added two MCA parameters relevant to arbitrary fork() support in Open MPI:

  - btl_openib_have_fork_support: This is a "read-only" MCA value, meaning that users cannot change it in the normal ways that MCA parameter values are set. It can be queried via the ompi_info command; it will have a value of 1 if this installation of Open MPI supports fork(), and 0 otherwise.

  - btl_openib_want_fork_support: This MCA parameter can be used to request conditional, absolute, or no fork() support. The following values are supported:

    - Negative values: try to enable fork support, but continue even if it is not available.

    - Zero: do not try to enable fork support.

    - Positive values: try to enable fork support and fail if it is not available.

Hence, you can reliably query Open MPI to see if it has support for fork() and force Open MPI to abort if you request fork support and it doesn't have it.

This feature is helpful to users who switch between multiple clusters and/or versions of Open MPI; they can write scripts that check whether the Open MPI they are using (and therefore the underlying IB stack) has fork support. For example:

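```shell
#!/bin/sh

# Field 7 of the parsable ompi_info output is the parameter's value
have_fork_support=$(ompi_info --param btl openib --level 9 --parsable | grep have_fork_support:value | cut -d: -f7)

if test "$have_fork_support" = "1"; then
    # Happiness / world peace / birds are singing
    echo "openib BTL has fork() support"
else
    # Despair / time for Häagen-Dazs
    echo "openib BTL does not have fork() support"
fi
```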

Alternatively, you can skip querying and simply try to run your job:

```shell
shell$ mpirun --mca btl_openib_want_fork_support 1 --mca btl openib,self ...
```

This will abort if Open MPI's openib BTL does not have fork support.

All this being said, even if Open MPI is able to enable the OpenFabrics fork() support, it does not mean that your fork()-calling application is safe.

- In general, if your application calls system() or popen(), it will likely be safe.

- However, note that arbitrary use of fork() is not supported by the OpenFabrics software stack. If you use fork() in your application, you must not touch any registered memory before calling some form of exec() to launch another process. Doing so will cause an immediate seg fault / program crash.

It is important to note that memory is registered on a per-page basis; it is therefore possible that your application has memory co-located on the same page as a buffer that was passed to an MPI communications routine (e.g., MPI_Send() or MPI_Recv()) or some other internally-registered memory inside Open MPI. You may therefore accidentally "touch" a page that is registered without even realizing it, thereby crashing your application.
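The page arithmetic behind this is simple: a buffer of len bytes starting at address addr occupies every page from addr / page_size through (addr + len - 1) / page_size (integer division), and each of those pages is registered in its entirety. This is a minimal sketch with made-up example addresses; pages_spanned is a hypothetical helper, and getconf PAGESIZE is used only as a default when no page size is given.

```shell
#!/bin/sh
# Hypothetical helper: print how many pages the buffer [addr, addr+len)
# touches. addr may be decimal or 0x-prefixed hex; len must be >= 1.
pages_spanned() {
    addr=$1
    len=$2
    page_size=${3:-$(getconf PAGESIZE)}
    first=$((addr / page_size))
    last=$(((addr + len - 1) / page_size))
    echo $((last - first + 1))
}

# A 16-byte buffer starting 6 bytes before a 4096-byte page boundary spans
# two pages, so registering it pins both pages in their entirety.
pages_spanned 0x10ffa 16 4096
```

Any other variable that happens to live on either of those two pages is therefore "registered" as well, even though it was never passed to MPI.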

There is unfortunately no way around this issue; it was intentionally designed into the OpenFabrics software stack. Please complain to the OpenFabrics Alliance that they should really fix this problem!

| 40. My MPI application sometimes hangs when using the openib BTL; how can I fix this? (openib BTL) |

Starting with v1.2.6, the MCA pml_ob1_use_early_completion parameter allows the user (or administrator) to turn off the "early completion" optimization. Early completion may cause "hang" problems with some MPI applications running on OpenFabrics networks, particularly loosely-synchronized applications that do not call MPI functions often. The default is 1, meaning that early completion optimization semantics are enabled (because it can reduce point-to-point latency).

See Open MPI legacy Trac ticket #1224 for further information.

NOTE: This FAQ entry only applies to the v1.2 series. This functionality is not required for v1.3 and beyond because of changes in how message passing progress occurs. Specifically, this MCA parameter exists only in the v1.2 series.
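For the v1.2 series (v1.2.6 and later), early completion can be turned off either by adding --mca pml_ob1_use_early_completion 0 to the mpirun command line or, as in this minimal sketch, through the environment: Open MPI reads MCA parameters from environment variables named OMPI_MCA_<parameter name>.

```shell
#!/bin/sh
# Turn off the v1.2-series "early completion" optimization for every
# Open MPI job launched from this shell (equivalent to passing
# "--mca pml_ob1_use_early_completion 0" to mpirun).
OMPI_MCA_pml_ob1_use_early_completion=0
export OMPI_MCA_pml_ob1_use_early_completion
```

Jobs are then launched as usual, e.g., shell$ mpirun -np 4 ./my_app, where ./my_app stands in for your own application. Setting the variable in a shell startup file applies the change to all jobs, while the --mca form limits it to a single run.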

| 41. Does InfiniBand support QoS (Quality of Service)? |

Yes.

InfiniBand QoS functionality is configured and enforced by the Subnet Manager/Administrator (e.g., OpenSM).

Open MPI (or any other ULP/application) sends traffic on a specific IB Service Level (SL). This SL is mapped to an IB Virtual Lane, and all the traffic arbitration and prioritization is done by the InfiniBand HCAs and switches in accordance with the priority of each Virtual Lane.